As a technology pioneer, Elon Musk is hardly anyone’s idea of a Luddite. So when Musk tweeted that national competition for AI superiority, with countries like China and Russia in the race, would be the “most likely cause” of World War III, it got people pretty worked up. However, the controversy over AI isn’t new, either for Musk or for society at large.

Artificial Intelligence: Terrifying From the Very Beginning

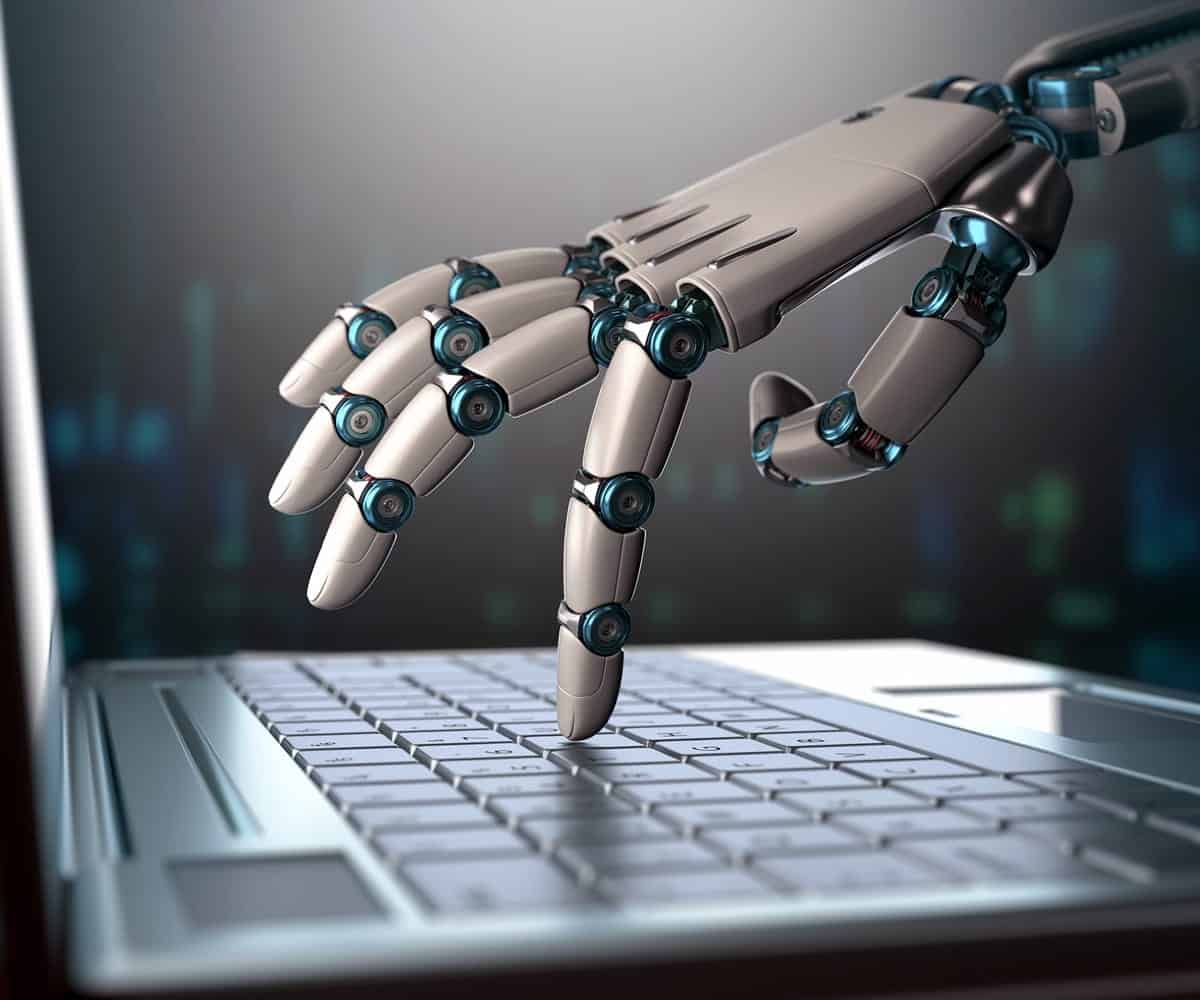

We were worried about the perils of creating an artificial being long before AI became a science. The term “robot” comes from a 1920 play by Karel Čapek called R.U.R. (Rossum’s Universal Robots, or Rossumovi Univerzální Roboti in the original Czech). The play opens in a factory that manufactures synthetic people to serve as workers. The robots are initially created without human emotions, but as more humanlike robots are developed, they do what… well, what an army of sentient robots generally does. Bye bye, humanity.

It’s an idea we’ve seen so many times that it’s become a cliché. The stories range from mad AIs who threaten and kill the humans they’re supposed to protect (think HAL 9000 in 2001: A Space Odyssey) to a total war to end humanity (Skynet in the Terminator series), but whenever computerized beings become sentient, the story usually ends badly for the humans involved.

But it’s not just a science fiction trope — some modern scientists have similar worries about artificial intelligence. Stephen Hawking has said that “the rise of powerful AI will be either the best, or the worst thing, ever to happen to humanity. We do not yet know which.”

Do Robots Dream of Electric World Domination?

On the extreme end of the spectrum, Hawking doesn’t beat around the bush. “The development of full artificial intelligence could spell the end of the human race,” he says. Hawking is concerned with the idea of a sentient AI. This type of intelligence could “redesign itself at an ever increasing rate,” which humans couldn’t compete with because we are “limited by slow biological evolution.”

Hawking’s concerns about the human race being superseded get a lot of press because they seem so fantastic, but he raises another, much more immediate concern: the potential for AI to be misused by its human controllers.

“Success in creating AI will be the biggest event in the history of our civilization. But it can also be the last, unless we learn how to avoid the risks. Alongside the benefits, AI will bring dangers, like powerful autonomous weapons, or new ways for the few to oppress the many.”

In these concerns, he’s joined by some of the most prominent thinkers both within and outside Silicon Valley. In 2015, Hawking joined a roster of world-renowned robotics and cognitive science researchers, Silicon Valley pioneers and other thought leaders in calling for a ban on autonomous weapons: artificially intelligent war machines that “select and engage targets without human intervention.” The letter described autonomous weapons as “the third revolution in warfare, after gunpowder and nuclear arms,” and warned of enormous potential for devastation should a new arms race occur:

“They will become ubiquitous and cheap for all significant military powers to mass-produce. It will only be a matter of time until they appear on the black market and in the hands of terrorists, dictators wishing to better control their populace, warlords wishing to perpetrate ethnic cleansing, etc. Autonomous weapons are ideal for tasks such as assassinations, destabilizing nations, subduing populations and selectively killing a particular ethnic group.”

Elon Musk is probably the most outspoken about the dangers of artificial intelligence. He’s publicly argued with friends like Alphabet CEO Larry Page, suggesting they could be unwittingly sowing the seeds of humanity’s destruction. He’s warned about AI arms races and compared artificial intelligence to “summoning the demon.” And because of that concern, he co-founded OpenAI, a nonprofit to banish artificial intelligence from the earth forever.

No, sorry. We meant a nonprofit dedicated to researching and building “safe artificial general intelligence.” That doesn’t sound like something an artificial intelligence opponent would do, does it? So what’s going on?

The Current (And Slightly Less Scary) State of Artificial Intelligence

Artificial intelligence is already here, and it has been for quite a while. When Facebook automatically suggests your name in a photo, or an app on your phone predicts the next word you’ll type to save you time, that’s artificial intelligence. Stephen Hawking uses artificial intelligence, too. Amyotrophic lateral sclerosis (ALS) has left him almost totally paralyzed, so he uses small movements of his cheek to select words. His device learns his speech patterns, helping him choose the correct word more quickly and easily.

Artificial intelligence is progressing rapidly, too. Digital assistants like Apple’s Siri and Google Assistant are getting better at helping you with routine tasks, from scheduling to running the laundry on automated appliances to sharing your location when you go out to meet friends. Artificial intelligence is already helping to detect lung cancer, prevent car crashes, and even diagnose rare diseases that have stumped doctors.

However, artificial intelligence can also power troubling technologies. Facial recognition in police body cameras may help officers recognize a dangerous suspect, but it could also be used to track innocent citizens. Autonomous navigation will continue to make transportation safer and more efficient, but it could also power drones built for destructive purposes, or be hacked to hijack a plane. And then there’s the dreaded robot rebellion.

The question isn’t whether AI should exist: it already exists. Nor are we debating whether artificial intelligence is helpful or destructive, because it obviously can be either, or both. The questions that matter are what kind of AI we should have, how it will be used, and who will control it.

Questions For the Future of AI

1. What Kind? Artificial General Intelligence vs. AI

Those robot rebellion scenarios are usually about Artificial General Intelligence (AGI). Garden-variety AI is designed to solve specific problems, like driving a car or detecting cancer. Artificial general intelligence is broader: it refers to an AI that’s capable of reasoning and thinking like a person. An AGI wouldn’t necessarily have to be conscious, but it would be able to do the things a human can. That could mean using language well enough to convince a human conversation partner that it is human (the Turing Test), walking into an average home and making coffee (the Coffee Test, proposed by Apple cofounder and AI “opponent” Steve Wozniak), or even enrolling in and passing a college class (the Robot College Student Test).

Artificial General Intelligence is the kind that people like Stephen Hawking and Elon Musk worry could replace humanity with another conscious species. But it’s also the kind OpenAI is researching, because, despite all the sensational coverage, stopping AI isn’t really Musk’s goal.

2. Who Controls AI?

Artificial intelligence is a powerful technology that can make humans much more effective at a wide range of tasks. One concern of groups like OpenAI is that this power will be concentrated in too few hands, or the wrong ones, enabling totalitarian governments, malevolent corporations, terrorists or other bad actors to do real damage, up to and including starting World War III. An open-source approach aims to even things out by making sure no single group can monopolize the technology.

Another concern that open source doesn’t answer is economic disruption. As technology develops and becomes more efficient, it reduces the amount of labor that’s needed, meaning fewer jobs. As robots and AI get more powerful, that process is going to accelerate. What happens to all those unemployed people? How do we make sure they have a reasonable standard of living and a place in society? And what should the ultimate goal be? As AI handles more and more of the work, can and should we sustain an economic model based on competition, or do we have to evolve to a more cooperative model?

3. How Should AI Be Used?

This is the most obvious question, but it’s the hardest to answer, because it requires us to acknowledge something we hate to admit: tech isn’t morally neutral — the things we design and build have consequences. Do we want to live in a world with autonomous killer robots? If not, how can we stop them from being created? Are technologies to recognize faces and track people dangerous to our freedom? If so, what limits do we need to put on them, and how can we enforce those limits?

We Need to Have a Talk About Artificial Intelligence

Artificial intelligence is already here, and it will keep advancing. We may never achieve a complete artificial general intelligence, but it’s a safe bet that computers will get much, much better at accomplishing a wide range of tasks. That will bring promising new applications, like advances in cancer research and better management, but it will also create new risks. Whether that future is one where we live longer, happier, more fulfilling lives or something much darker depends on the decisions we make right now.

Proto.io lets anyone build mobile app prototypes that feel real. No coding or design skills required. Bring your ideas to life quickly! Sign up for a free 15-day trial of Proto.io today and get started on your next mobile app design.

Have an opinion about the future of AI, or a promising AI app you’d like to share? Let us know by tweeting us @Protoio!